Unlocking the Power of Embedding Models: A Comprehensive Guide

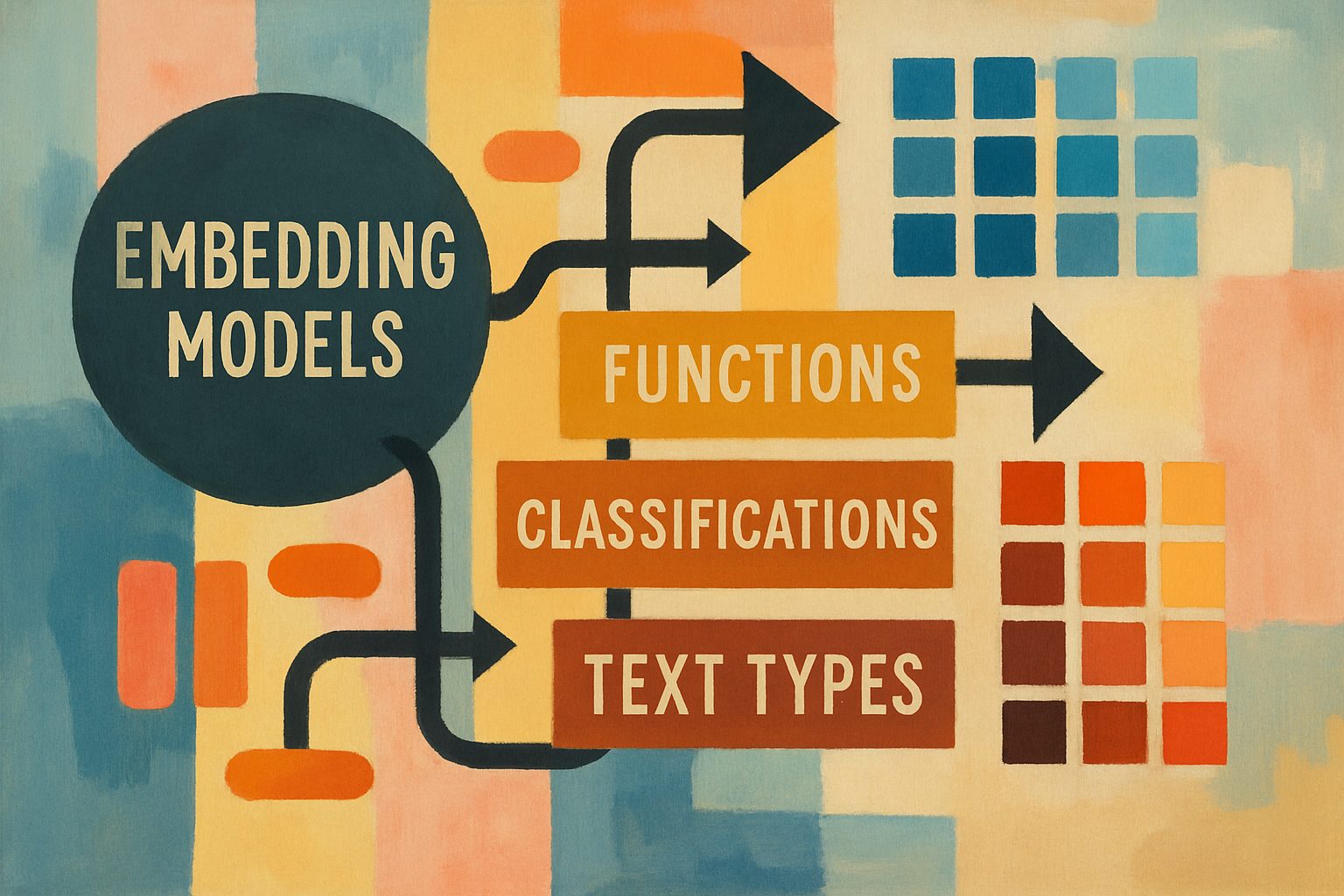

Dive into the fascinating world of embedding models, where we unravel their functions and classifications. Discover which models are best suited for different text types and the reasons behind their effectiveness.

This comprehensive analysis explores Compare the various embedding models and explain their functions, the different classes of embeddings, and specifically which embeddings are suitable for different types of texts. Illustrate this relationship and explain the underlying reasons., based on extensive research and multiple data sources.

Embedding models are foundational in modern machine learning and natural language processing (NLP), enabling the transformation of high-dimensional and complex data into lower-dimensional vectors that preserve semantic relationships. This comprehensive exploration will categorize and describe various embedding models, weighing their advantages and limitations to foster a deeper understanding of their applications.

Thesis Statement

The evolution of embedding models has significantly enhanced the ability of machine learning systems to process and understand complex data, with diverse types tailored for specific applications ranging from text to images and graphs.

Types of Embedding Models

1. Word Embeddings

Word embeddings represent words as dense vectors in a continuous vector space, capturing semantic meanings based on their context. This model addresses the limitations of traditional methods like one-hot encoding.

- Key Models:

- Word2Vec: Developed by Google, it utilizes shallow neural networks through two approaches:

- Continuous Bag of Words (CBOW): Predicts a target word given its context.

- Skip-Gram: Predicts context words given a target word.

- Example: The vector arithmetic

king - man + woman ≈ queenillustrates its effectiveness in capturing relationships.

- GloVe (Global Vectors for Word Representation): A count-based model that leverages word co-occurrence statistics to generate embeddings, effectively capturing both local and global contexts. It represents words like “apple” and “fruit” in proximity in the vector space due to their co-occurrence.

2. Sentence Embeddings

Sentence embeddings extend the concept of word embeddings to entire sentences, capturing the overall meaning and context.

- Key Models:

- Universal Sentence Encoder: Designed to create embeddings for sentences, it is useful for a variety of tasks such as semantic similarity, clustering, and classification.

- Sentence-BERT: A modification of BERT for semantic textual similarity, providing embeddings that can be easily compared.

3. Document Embeddings

Document embeddings represent entire documents as vectors, summarizing the content and context of longer texts.

- Key Models:

- Doc2Vec: An extension of Word2Vec that associates a unique vector with each document, allowing for the representation of documents in the same vector space as words.

4. Image Embeddings

Image embeddings convert images into vectors, enabling tasks like image classification and retrieval.

- Key Models:

- Convolutional Neural Networks (CNNs): Typically used for generating image embeddings by transforming pixel data into representative vectors.

- CLIP (Contrastive Language–Image Pretraining): A model that learns to associate images with text descriptions, creating embeddings that can relate visual and textual information.

5. Graph Embeddings

Graph embeddings translate nodes and their relationships within graphs into vectors, capturing structural information.

- Key Models:

- DeepWalk: Utilizes random walks on graphs to learn node embeddings that preserve neighborhood information.

- Node2Vec: A variation of DeepWalk that allows for more flexible exploration of neighborhoods.

6. Multimodal Embeddings

These embeddings integrate multiple types of data (text, images, audio) into a single representation, facilitating cross-modal tasks.

- Key Models:

- CLIP: By aligning text and images in the same embedding space, it enables tasks like zero-shot image classification.

- GPT-4 Vision: Incorporates visual input alongside text, showcasing the potential of multimodal embeddings in advanced AI models.

7. Contextual Embeddings

Contextual embeddings consider the surrounding context of words, generating different embeddings for the same word based on its usage.

- Key Models:

- ELMo (Embeddings from Language Models): Creates context-sensitive embeddings by considering all words in a sentence.

- BERT (Bidirectional Encoder Representations from Transformers): A transformer-based model that generates embeddings by attending to the entire context of a word.

8. Instruction-Tuned Embeddings

These are specialized embeddings designed to follow specific instructions for better performance in task-oriented applications.

- Key Models:

- Gemini: A model that focuses on instruction-following tasks, showing improvements in performance compared to traditional embeddings.

Comparative Analysis of Embedding Models

| Embedding Type | Example Models | Key Features | Applications |

|---|---|---|---|

| Word Embeddings | Word2Vec, GloVe | Capture semantic relationships | NLP tasks, sentiment analysis |

| Sentence Embeddings | Universal Sentence Encoder | Represent entire sentences | Semantic similarity, classification |

| Document Embeddings | Doc2Vec | Capture |

Thesis Statement

Embeddings are a crucial component in machine learning, particularly in natural language processing (NLP) and computer vision. They effectively transform high-dimensional data into lower-dimensional representations while preserving semantic relationships. This document categorizes the various classes of embeddings, exploring their functions and applications.

Overview of Embeddings

Embeddings represent data points in a continuous vector space, where similar items are located closer together. This technique enhances the ability of machine learning models to process and interpret complex data, ranging from text to images. Below are the primary classes of embeddings:

1. Word Embeddings

Definition: Word embeddings represent individual words as dense vectors in a high-dimensional space.

Examples: Popular models include Word2Vec, GloVe, and FastText.

Function:

– Capture semantic meanings and relationships between words.

– Facilitate various NLP tasks such as text classification, sentiment analysis, and machine translation.

Key Characteristics:

– Semantic Relationships: Words with similar meanings are positioned closely. For example, the vector for “king” is closer to “queen” than to “car” source.

– Vector Arithmetic: Allows operations like king - man + woman ≈ queen, showcasing the model’s understanding of gender relationships source.

2. Sentence Embeddings

Definition: Sentence embeddings provide vector representations for entire sentences, capturing their overall meaning.

Examples: Models like Universal Sentence Encoder and Sentence-BERT.

Function:

– Enable tasks that require understanding the semantic content of sentences, such as summarization and semantic search.

– Facilitate more accurate classification of documents by considering the context of multiple words.

Key Characteristics:

– Contextual Understanding: Unlike word embeddings, sentence embeddings consider inter-word relationships, leading to a more comprehensive representation source.

3. Document Embeddings

Definition: Document embeddings extend the concept of sentence embeddings to larger text units, such as paragraphs or entire documents.

Examples: Doc2Vec.

Function:

– Useful for tasks that involve assessing the similarity between documents or clustering documents based on topics.

– Enhance applications in information retrieval, where documents can be indexed and searched effectively.

Key Characteristics:

– Capturing Context: Similar to sentence embeddings, but focused on more extensive text structures, leading to better context preservation source.

4. Image Embeddings

Definition: Image embeddings represent images as dense vectors, typically generated by convolutional neural networks (CNNs).

Examples: ResNet, VGG.

Function:

– Facilitate tasks such as image classification, retrieval, and object detection.

– Allow similar images to be clustered together in the embedding space.

Key Characteristics:

– Feature Extraction: CNNs extract meaningful features from images, enabling effective representation in a lower-dimensional space source.

5. Graph Embeddings

Definition: Graph embeddings convert nodes or subgraphs into vector representations while preserving their structural relationships.

Examples: DeepWalk, Node2Vec.

Function:

– Useful in social network analysis, recommendation systems, and link prediction.

– Enable machine learning algorithms to analyze complex networks more effectively.

Key Characteristics:

– Structural Preservation: Maintain the relationships between nodes, allowing for effective representation of connectivity source.

6. Contextual Embeddings

Definition: Contextual embeddings generate different vectors for the same word based on its context in a sentence.

Examples: ELMo, BERT, GPT.

Function:

– Capture nuanced meanings of words depending on their context, significantly improving tasks like sentiment analysis and language translation.

Key Characteristics:

– Dynamic Representation: Unlike static embeddings, these capture variations in meaning, enhancing understanding of polysemous words (words with multiple meanings) source.

7. Multimodal Embeddings

Definition: These embeddings integrate different data types (

The advent of embedding models has significantly transformed the landscape of natural language processing (NLP) and machine learning (ML). These models translate high-dimensional data into dense vector representations, facilitating the understanding and processing of complex information. However, despite their widespread adoption and advancements, several knowledge gaps persist within this domain. This report aims to identify these gaps through a comprehensive review of recent literature on embedding models.

Thesis Statement

While embedding models have made remarkable strides in various applications, critical gaps remain in their theoretical foundations, practical implementations, and ethical considerations. Addressing these gaps is essential for the advancement of embedding technologies and their applications across diverse fields.

Overview of Embedding Models

What Are Embeddings?

Embeddings are numerical representations of data objects (such as words, sentences, or images) in a high-dimensional vector space. They capture semantic relationships and contextual meanings, allowing machines to process and interpret human language more effectively.

- Word Embeddings: Represent individual words as vectors (e.g., Word2Vec, GloVe).

- Sentence Embeddings: Capture meanings of entire sentences (e.g., Universal Sentence Encoder).

- Image Embeddings: Represent images as vectors using convolutional neural networks (CNNs).

- Graph Embeddings: Map nodes in a graph to vectors, preserving structural relationships.

For instance, similar words like “king” and “queen” are represented by vectors that are close together in the embedding space, reflecting their semantic similarity. This capability is crucial for tasks such as clustering, classification, and retrieval in NLP applications (Data Science Dojo).

Recent Advancements and Trends

Recent advancements in embedding technologies have been driven by developments in large language models (LLMs) and benchmarks that evaluate their performance. Key trends include:

- Multilingual Models: Supporting over 1000 languages.

- Domain-Specific Models: Tailored for specialized fields such as medicine and coding.

- Multimodal Models: Integrating text, image, and audio data (Adnan Masood).

However, as the field evolves, it becomes critical to assess the gaps that hinder further progress.

Identified Knowledge Gaps

1. Theoretical Foundations

While many embedding models are widely used, there is a lack of comprehensive understanding regarding their theoretical underpinnings. For example, the mathematical frameworks that govern the relationships between embeddings are often not well articulated. This leads to challenges in:

- Interpretability: Understanding why certain embeddings perform better than others remains elusive.

- Generalizability: How well do these embeddings transfer across different domains and tasks?

2. Practical Implementation Challenges

Despite the proliferation of embedding models, practical implementation issues persist, such as:

- Computational Costs: Large models, such as BERT and GPT, require substantial resources, raising questions about their efficiency in real-world applications (Nayab Hassan).

- Real-Time Processing: The ability to generate embeddings in real-time is still a challenge, particularly for applications that require immediate responses (e.g., chatbots).

3. Ethical Considerations

The embedding models can inadvertently propagate biases found in the training data, which raises ethical concerns. Key issues include:

- Bias and Fairness: Embedding models may encode societal biases, leading to unfair treatment in applications such as hiring or law enforcement (Nayab Hassan).

- Transparency: The opacity of these models complicates accountability and trust in their decisions, particularly in high-stakes scenarios (Medium).

4. Evaluation Metrics

Current benchmarks for evaluating embedding models primarily focus on accuracy and efficiency. However, there are gaps in evaluating:

- Robustness: How well do models perform under adversarial conditions?

- Contextual Understanding: Assessing how embeddings change based on context remains underexplored.

5. Integration with Other Technologies

The integration of embedding models with other AI technologies, such as reinforcement learning or generative adversarial networks (GANs), is an area ripe for

Vyftec – Embedding Models Analysis

Unlock the potential of your text with our expertise in comparing embedding models to find the best fit for your content. Experience Swiss quality solutions tailored to your needs—let’s elevate your projects together!

📧 damian@vyftec.com | 💬 WhatsApp